Page 31 - Use cases and requirements for the vehicular multimedia networks - Focus Group on Vehicular Multimedia (FG-VM)


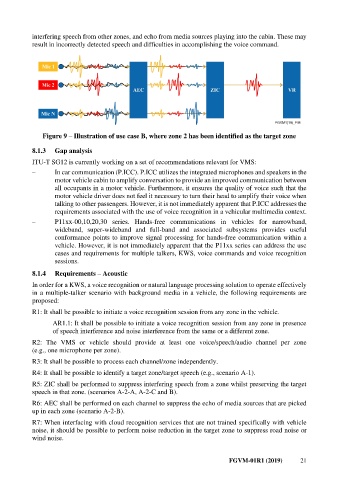

interfering speech from other zones, and echo from media sources playing into the cabin. These may result in falsely detected speech and in difficulty completing the voice command.

Figure 9 – Illustration of use case B, where zone 2 has been identified as the target zone

8.1.3 Gap analysis

ITU-T SG12 is currently working on a set of Recommendations relevant to VMS:

– In-car communication (P.ICC). P.ICC uses the microphones and loudspeakers integrated in the motor vehicle cabin to amplify conversation and thereby improve communication between all occupants of the vehicle. It also ensures voice quality such that the driver does not feel the need to turn their head to make their voice heard when talking to other passengers. However, it is not immediately apparent that P.ICC addresses the requirements associated with the use of voice recognition in a vehicular multimedia context.

– P.1100, P.1110, P.1120 and P.1130 series. These Recommendations on hands-free communication in vehicles (narrowband, wideband, super-wideband and full-band, and associated subsystems) provide useful conformance points for improving signal processing for hands-free communication within a vehicle. However, it is not immediately apparent that the P.11xx series can address the use cases and requirements for multiple talkers, KWS, voice commands and voice recognition sessions.

8.1.4 Requirements – Acoustic

For a KWS, voice recognition or natural language processing solution to operate effectively in a multiple-talker scenario with background media playing in a vehicle, the following requirements are proposed:

R1: It shall be possible to initiate a voice recognition session from any zone in the vehicle.

AR1.1: It shall be possible to initiate a voice recognition session from any zone in the presence of speech interference and noise interference from the same or a different zone.

R2: The VMS or vehicle should provide at least one voice/speech/audio channel per zone (e.g., one microphone per zone).

R3: It shall be possible to process each channel/zone independently.
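R2 and R3 together imply a front end that keeps one channel, and one set of processing state, per zone. The Python sketch below illustrates only that structure. The class names are hypothetical, and the first-order DC-blocking filter is an assumed stand-in for whatever per-channel processing (ZIC, AEC, noise reduction) a real VMS would run in each zone.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Sequence


@dataclass
class DcBlocker:
    """First-order DC-blocking filter y[n] = x[n] - x[n-1] + r*y[n-1].

    Each instance keeps its own filter state, so one instance per zone
    keeps the zones' processing independent (R3).
    """
    r: float = 0.995
    x_prev: float = 0.0
    y_prev: float = 0.0

    def process(self, frame: Sequence[float]) -> List[float]:
        out = []
        for x in frame:
            y = x - self.x_prev + self.r * self.y_prev
            self.x_prev, self.y_prev = x, y
            out.append(y)
        return out


class ZoneProcessor:
    """Hypothetical per-zone front end: one independent chain per zone channel (R2)."""

    def __init__(self, zone_ids: Iterable[int]) -> None:
        self.chains: Dict[int, DcBlocker] = {z: DcBlocker() for z in zone_ids}

    def process(self, frames: Dict[int, Sequence[float]]) -> Dict[int, List[float]]:
        # Only the chains of zones that delivered a frame are touched;
        # the state of every other zone is left unchanged.
        return {z: self.chains[z].process(f) for z, f in frames.items()}
```

Because the state lives in the per-zone object rather than in shared variables, processing a frame from zone 1 cannot disturb the filters of zones 2 to 4.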

R4: It shall be possible to identify a target zone/target speech (e.g., scenario A-1).
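One simple way to approach R4 is to compare short-term levels across the per-zone microphones. The sketch below assumes that the occupant who is speaking is loudest at their own zone's microphone. It is not the identification method the scenarios prescribe (which may, for example, rely on where a keyword was detected), and the thresholds `min_db` and `margin_db` are illustrative assumptions.

```python
import math
from typing import Optional, Sequence


def frame_power_db(frame: Sequence[float]) -> float:
    """Mean power of one frame in dB; the 1e-12 floor avoids log10(0)."""
    power = sum(x * x for x in frame) / max(len(frame), 1)
    return 10.0 * math.log10(power + 1e-12)


def identify_target_zone(zone_frames: Sequence[Sequence[float]],
                         min_db: float = -50.0,
                         margin_db: float = 6.0) -> Optional[int]:
    """Return the 0-based index of the dominant zone, or None when no zone is
    clearly dominant (all zones quiet, or the two loudest within margin_db)."""
    if not zone_frames:
        return None
    levels = [frame_power_db(f) for f in zone_frames]
    ranked = sorted(range(len(levels)), key=lambda z: levels[z], reverse=True)
    best = ranked[0]
    if levels[best] < min_db:
        return None  # nobody is speaking loudly enough
    if len(ranked) > 1 and levels[best] - levels[ranked[1]] < margin_db:
        return None  # ambiguous: two zones at similar level
    return best
```

For example, `identify_target_zone([[0.001] * 160, [0.5] * 160, [0.01] * 160])` returns `1`, the second zone. Returning `None` in ambiguous cases leaves the decision to a later stage instead of guessing.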

R5: ZIC shall be performed to suppress interfering speech picked up in a zone from other zones whilst preserving the target speech in that zone (scenarios A-2-A, A-2-C and B).
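To make the idea of zone interference suppression concrete, the sketch below uses a deliberately crude level-difference gate. This is a swapped-in illustration and not the ZIC technique the requirement refers to. It assumes that when an occupant of another zone speaks, that zone's own microphone is louder than the target-zone microphone. The 160-sample frame (10 ms at an assumed 16 kHz) and the gain values are also assumptions. Unlike a real ZIC, it cannot separate the two talkers when they speak at the same time.

```python
import math
from typing import List, Sequence


def _power_db(frame: Sequence[float]) -> float:
    """Mean power of one frame in dB; the 1e-12 floor avoids log10(0)."""
    power = sum(x * x for x in frame) / max(len(frame), 1)
    return 10.0 * math.log10(power + 1e-12)


def zone_gate(target: Sequence[float], others: Sequence[Sequence[float]],
              frame_len: int = 160, margin_db: float = 3.0,
              atten_db: float = -20.0) -> List[float]:
    """Crude level-difference gate applied to the target-zone channel.

    A frame passes unchanged when the target microphone is at least margin_db
    louder than every other zone's microphone; otherwise it is attenuated by
    atten_db, on the assumption that its energy comes from another zone.
    """
    low_gain = 10.0 ** (atten_db / 20.0)
    out: List[float] = []
    for start in range(0, len(target), frame_len):
        frame = target[start:start + frame_len]
        target_db = _power_db(frame)
        loudest_other = max((_power_db(o[start:start + frame_len]) for o in others),
                            default=-math.inf)
        gain = 1.0 if target_db >= loudest_other + margin_db else low_gain
        out.extend(gain * x for x in frame)
    return out
```

Production systems typically use adaptive filtering or beamforming across the zone microphones so that target speech survives double talk. The gate above only shows where such a stage sits in the per-zone chain.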

R6: AEC shall be performed on each channel to suppress the echo of media sources that are picked up in each zone (scenario A-2-B).
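A common textbook approach to R6 is a normalised least-mean-squares (NLMS) adaptive filter per zone channel. The filter uses the media signal sent to the loudspeakers as its reference, estimates the loudspeaker-to-microphone echo path, and subtracts the estimated echo. The sketch below assumes a single media reference, a short echo path that fits in `taps` coefficients, and no double-talk detection. The demo's echo path `h` is hypothetical.

```python
import math
import random
from collections import deque
from typing import List, Sequence


def nlms_aec(mic: Sequence[float], ref: Sequence[float], taps: int = 16,
             mu: float = 0.5, eps: float = 1e-6) -> List[float]:
    """Cancel the echo of `ref` (media signal) in `mic` with an NLMS filter.

    Returns the error signal e[n] = mic[n] - w^T x[n], i.e. the echo-reduced channel.
    """
    w = [0.0] * taps
    x_buf = deque([0.0] * taps, maxlen=taps)  # x_buf[k] holds ref[n - k]
    out = []
    for n, d in enumerate(mic):
        x_buf.appendleft(ref[n] if n < len(ref) else 0.0)
        y_hat = sum(wk * xk for wk, xk in zip(w, x_buf))  # estimated echo
        e = d - y_hat
        step = mu * e / (eps + sum(xk * xk for xk in x_buf))  # normalised step
        w = [wk + step * xk for wk, xk in zip(w, x_buf)]
        out.append(e)
    return out


def simulate_echo(ref: Sequence[float], h: Sequence[float]) -> List[float]:
    """Convolve the media signal with a hypothetical echo path h."""
    return [sum(h[k] * ref[n - k] for k in range(len(h)) if n - k >= 0)
            for n in range(len(ref))]


if __name__ == "__main__":
    rng = random.Random(0)
    ref = [rng.uniform(-1.0, 1.0) for _ in range(4000)]
    mic = simulate_echo(ref, [0.0, 0.5, 0.3, -0.1])  # echo only, no talker
    err = nlms_aec(mic, ref)
    echo_pow = sum(v * v for v in mic[-1000:])
    resid_pow = sum(v * v for v in err[-1000:])
    erle_db = 10.0 * math.log10(echo_pow / (resid_pow + 1e-30))
    print(f"ERLE over the last 1000 samples: {erle_db:.1f} dB")
```

Running one such canceller per zone channel matches the "on each channel" wording of R6. A real system would also need to freeze adaptation while the near-end occupant is speaking.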

R7: When interfacing with cloud recognition services that have not been trained specifically on vehicle noise, it should be possible to perform noise reduction in the target zone to suppress road noise or wind noise.
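As one illustration of the noise reduction in R7, the sketch below applies classic magnitude spectral subtraction to the target-zone channel. It assumes that a noise-only segment (for example, before the talker starts) is available for estimating the road or wind noise spectrum. It uses a naive DFT and non-overlapping rectangular frames to stay short and dependency-free. The frame length and spectral floor are illustrative assumptions, and a real implementation would use an FFT with overlapping windows.

```python
import cmath
import math
import random
from typing import List, Sequence


def dft(x: Sequence[float]) -> List[complex]:
    """Naive O(N^2) DFT; adequate for a short illustrative frame."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spec: Sequence[complex]) -> List[float]:
    """Inverse of dft(); keeps the real part (spectra here are conjugate-symmetric)."""
    n = len(spec)
    return [(sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def estimate_noise_mag(noise: Sequence[float], frame_len: int = 64) -> List[float]:
    """Average magnitude spectrum over noise-only frames."""
    frames = [noise[i:i + frame_len]
              for i in range(0, len(noise) - frame_len + 1, frame_len)]
    if not frames:
        raise ValueError("need at least one full noise-only frame")
    mags = [[abs(c) for c in dft(f)] for f in frames]
    return [sum(col) / len(mags) for col in zip(*mags)]


def spectral_subtract(signal: Sequence[float], noise_mag: Sequence[float],
                      frame_len: int = 64, floor: float = 0.05) -> List[float]:
    """Magnitude spectral subtraction on non-overlapping rectangular frames.

    The noisy phase is kept, each bin keeps at least `floor` times its original
    magnitude, and a trailing partial frame is dropped for simplicity.
    """
    out: List[float] = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        cleaned = []
        for c, n_mag in zip(dft(signal[start:start + frame_len]), noise_mag):
            mag = max(abs(c) - n_mag, floor * abs(c))
            cleaned.append(cmath.rect(mag, cmath.phase(c)))
        out.extend(idft(cleaned))
    return out


if __name__ == "__main__":
    rng = random.Random(1)
    noise = [rng.gauss(0.0, 0.1) for _ in range(64 * 30)]
    noise_mag = estimate_noise_mag(noise[:64 * 20])
    segment = noise[64 * 20:]
    cleaned = spectral_subtract(segment, noise_mag)
    before = sum(v * v for v in segment)
    after = sum(v * v for v in cleaned)
    print(f"noise energy reduced by {10 * math.log10(before / after):.1f} dB")
```

The spectral floor keeps the output from collapsing to silence in bins where the noise estimate exceeds the observed magnitude, which limits the "musical noise" that plain subtraction tends to produce.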

FGVM-01R1 (2019) 21